In a tech landscape dominated by massive AI models with tens or hundreds of billions of parameters, Small Language Models (SLMs) are carving out a strategic and highly practical space. With model sizes typically ranging from a few million to a few billion parameters, SLMs offer compact, efficient solutions for text generation, classification, summarization, and more. For marketers, this translates to faster, cheaper, and more targeted AI applications without the infrastructure burden of larger models.

SLMs are not just a lightweight alternative to Large Language Models (LLMs). They represent a purposeful evolution—streamlined models fine-tuned for specific, high-value use cases. From content automation to personalization, campaign optimization to customer support, SLMs are opening up powerful new capabilities for marketing teams of all sizes.

What Are Small Language Models (SLMs)?

Small Language Models are a class of AI models optimized for performance in targeted language tasks using fewer parameters than LLMs. While LLMs like GPT-4 or Claude 3 are widely believed to use hundreds of billions of parameters (their vendors do not publish exact counts), SLMs typically fall below 10 billion. Many sit in the 1 to 4 billion range, and some go lower still, down to a few hundred million parameters.

This reduced size makes SLMs faster and cheaper to run, easier to deploy on edge devices or standard servers, and more accessible to non-enterprise teams. Despite their smaller footprint, SLMs still leverage the same core transformer architecture that powers LLMs. They achieve efficiency gains through techniques like pruning (removing unnecessary parameters), quantization (reducing numerical precision), and knowledge distillation (training smaller models to mimic larger ones).
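To make quantization concrete, here is a minimal Python sketch of symmetric per-tensor int8 quantization, assuming a single shared scale factor for the whole weight list. Real toolkits typically use finer-grained (per-channel or group-wise) scales and often lower bit widths, so treat this as an illustration of the idea rather than any particular library's method. Each weight is stored as one signed byte instead of a 4-byte float, a 4x reduction, at the cost of a small rounding error.

```python
# Minimal sketch of symmetric per-tensor int8 quantization (illustrative only;
# production toolkits use per-channel or group-wise scales and calibration).

def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each weight to the nearest integer step and clip to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in q]

weights = [0.42, -1.27, 0.003, 0.88]
q, scale = quantize_int8(weights)
approx = dequantize_int8(q, scale)
print("quantized:", q)
print("max rounding error:", max(abs(a - b) for a, b in zip(weights, approx)))
```

Because every value is rounded to the nearest multiple of `scale`, the error per weight stays within half a step; small weights such as 0.003 may round to zero, which is one reason real schemes use more than one scale per tensor.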

Examples of current SLMs include Microsoft's Phi-3.5-mini (3.8B parameters), Alibaba's Qwen2.5-1.5B, Meta's Llama 3.2 1B, and Hugging Face's SmolLM2-1.7B, among others.
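A rough way to see why these models fit on ordinary hardware is to multiply parameter count by bytes per parameter. The sketch below does that for the models named above, using the nominal sizes in their names (Llama 3.2 1B, for instance, has slightly more than 1 billion parameters) and counting weights only; the KV cache, activations, and runtime overhead add more on top. By this estimate, Phi-3.5-mini needs about 7.6 GB at 16-bit precision and about 1.9 GB at 4 bits.

```python
# Back-of-the-envelope weight-memory estimate: parameters x bytes per parameter.
# Weights only: KV cache, activations, and runtime overhead are extra.
# Parameter counts are the nominal sizes from the model names.

MODELS = {
    "Phi-3.5-mini": 3.8e9,
    "Qwen2.5-1.5B": 1.5e9,
    "Llama 3.2 1B": 1.0e9,
    "SmolLM2-1.7B": 1.7e9,
}

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "4-bit": 0.5}

def weight_memory_gb(params: float, precision: str) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM[precision] / 1e9

for name, params in MODELS.items():
    sizes = ", ".join(
        f"{p}: {weight_memory_gb(params, p):.2f} GB" for p in BYTES_PER_PARAM
    )
    print(f"{name:<14} {sizes}")
```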

Why Are SLMs Gaining Traction?

Several macro and operational factors are fueling the rise of SLMs, particularly in industries like marketing that rely heavily on efficient, repeatable workflows.

Efficiency and Cost-Effectiveness

SLMs can be trained and fine-tuned with significantly less compute, which reduces infrastructure costs. They can often run inference on CPUs or low-power GPUs, so they rarely require expensive cloud deployments. This makes them appealing to small and mid-sized marketing teams.

Speed and Latency

On comparable hardware, SLMs return responses faster than LLMs, making them well suited to real-time applications like chatbots, auto-reply systems, and product recommendation engines.

Domain Focus and Precision

SLMs can be fine-tuned on specific verticals or functions, which can reduce off-topic or fabricated outputs within that domain and improve relevance. For marketers, this can mean more brand-consistent messaging with fewer QA loops.

Privacy and Control

By deploying SLMs locally or in secure environments, organizations maintain tighter control over sensitive data. This can make it easier to comply with privacy regulations like GDPR or HIPAA.

Scalability Without Cost Explosion

With their lighter footprint, multiple SLMs can be deployed across various touchpoints—each specialized for a specific task—without overwhelming operational budgets.

SLMs in Marketing: Use Cases and Practical Impact

Content Generation

One of the clearest use cases for SLMs is automating content at scale. SLMs can generate product descriptions, landing page copy, social media posts, email subject lines, and meta descriptions—all aligned to brand tone if properly fine-tuned. Because they operate efficiently, they can be integrated directly into marketing workflows for near-instant output.
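Fine-tuning improves tone, but many teams still gate generated copy with cheap automated checks before it ships. Below is a minimal sketch of such a gate for meta descriptions, assuming a 160-character limit and a hypothetical list of banned phrases; both are placeholders to replace with your own style guide.

```python
# Hypothetical post-generation QA gate for SLM-written meta descriptions.
# MAX_META_CHARS and BANNED_PHRASES are illustrative assumptions, not standards.

MAX_META_CHARS = 160
BANNED_PHRASES = {"best in the world", "guaranteed results"}

def qa_issues(text: str) -> list[str]:
    """Return a list of problems; an empty list means the draft passes."""
    issues = []
    if len(text) > MAX_META_CHARS:
        issues.append(f"too long ({len(text)} > {MAX_META_CHARS} chars)")
    lowered = text.lower()
    for phrase in sorted(BANNED_PHRASES):
        if phrase in lowered:
            issues.append(f"banned phrase: {phrase!r}")
    if not text.strip():
        issues.append("empty output")
    return issues

drafts = [
    "Lightweight trail shoes with grippy soles for wet and rocky paths.",
    "Guaranteed results with the best in the world running shoe!",
]
for d in drafts:
    print(qa_issues(d) or "OK", "|", d)
```

Drafts that fail can be regenerated or routed to a human reviewer, so people only see the exceptions rather than every output.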

Hyper-Personalization

SLMs can be fine-tuned on customer segment data, behavior analytics, or vertical-specific language to create hyper-personalized messaging. Whether it’s individualized email campaigns, dynamic website experiences, or tailored chatbot responses, SLMs improve customer engagement without the cost or latency of calling an LLM API.

Customer Support and Chatbots

For companies offering real-time support, SLMs power chatbots and virtual assistants that respond quickly and, when well tuned, accurately. Their ability to run on local infrastructure makes them ideal for private customer interactions, especially in regulated industries. They can answer FAQs, guide users through onboarding, and resolve simple product queries with minimal human intervention.

Sentiment and Feedback Analysis

SLMs excel at sentiment classification and can be used to analyze customer reviews, social media mentions, or survey responses. With proper training data, they can extract trends, identify emerging issues, and feed insights back into the marketing optimization loop. They are fast and efficient enough to make real-time analysis a practical reality.
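One way to turn per-review sentiment labels into trend signals is to compute the share of negative mentions per topic and flag topics that cross a threshold. This minimal sketch assumes the reviews have already been labeled by an SLM classifier and tagged with a topic; the topics, labels, and 0.4 threshold are hypothetical.

```python
from collections import defaultdict

# Assumed input: reviews already labeled by a sentiment model, as (topic, label) pairs.
# Topics, labels, and the alert threshold are hypothetical examples.
labeled = [
    ("shipping", "negative"), ("shipping", "negative"), ("shipping", "positive"),
    ("sizing", "positive"), ("sizing", "positive"), ("sizing", "negative"),
    ("price", "neutral"), ("price", "positive"),
]

def negative_share_by_topic(rows):
    """Fraction of negative labels for each topic."""
    counts = defaultdict(lambda: [0, 0])  # topic -> [negative count, total count]
    for topic, label in rows:
        counts[topic][1] += 1
        if label == "negative":
            counts[topic][0] += 1
    return {t: neg / total for t, (neg, total) in counts.items()}

def emerging_issues(rows, threshold=0.4):
    """Topics whose negative share meets the threshold, worst first."""
    shares = negative_share_by_topic(rows)
    flagged = [t for t, s in shares.items() if s >= threshold]
    return sorted(flagged, key=lambda t: -shares[t])

print(emerging_issues(labeled))
```

Run over a rolling window (for example, the last seven days of reviews), the flagged list becomes a simple input to campaign or product decisions.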

Compliance and Data Privacy

Because SLMs can be deployed without needing to send data to external servers, they reduce the privacy risks associated with cloud-based LLMs. This makes them well-suited for industries like healthcare, finance, and insurance, where regulatory compliance is non-negotiable. Marketers in these sectors gain AI capabilities without compromising security or trust.

Operational Advantages of SLMs in Marketing

Cost Reduction

SLMs reduce costs across training, deployment, and inference. Businesses can often avoid expensive GPUs and high-volume API calls to proprietary LLM services.

Deployment Flexibility

SLMs can be embedded in websites, mobile apps, CRMs, and other marketing systems with minimal effort. They are easier to maintain and faster to update than larger models.

Lower Error Rates in Domain Tasks

Fine-tuned SLMs trained on company-specific data often perform better in targeted tasks compared to general-purpose LLMs, which may produce inaccurate or verbose outputs.

Rapid Iteration

Because of their size, SLMs can be trained and deployed in a fraction of the time it takes to fine-tune an LLM. This enables faster experimentation, iteration, and campaign adaptation.

Distributed Intelligence

Multiple SLMs can operate in parallel across different functions. For example, one model might handle email personalization, while another focuses on product recommendation or sentiment classification—each optimized for its own task.

Strategic Fit: SLMs in Hybrid AI Stacks

Many marketing teams are now adopting hybrid AI stacks. In this model, SLMs handle specific, operational workloads—copy generation, chatbot responses, feedback analysis—while LLMs are reserved for creative brainstorming, long-form content, or high-level strategy support.

This blended approach allows organizations to get the best of both worlds: cost-effective precision for day-to-day execution and flexible intelligence when needed.
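The division of labor described above can be sketched as a simple router: each routine task type maps to its own specialized SLM, and anything without a dedicated model falls back to a general-purpose LLM. The task names and model identifiers here are hypothetical placeholders; a production router might also escalate based on the SLM's confidence or the complexity of the request.

```python
# Illustrative router for a hybrid stack: routine tasks go to task-specific SLMs,
# open-ended work goes to an LLM. All model names are hypothetical placeholders.

SLM_ROUTES = {
    "email_personalization": "slm-email-v1",
    "product_recommendation": "slm-recs-v1",
    "sentiment": "slm-sentiment-v1",
    "chatbot_faq": "slm-support-v1",
}
LLM_FALLBACK = "llm-general"

def route(task_type: str) -> str:
    """Pick a specialized SLM when one exists; otherwise escalate to the LLM."""
    return SLM_ROUTES.get(task_type, LLM_FALLBACK)

for task in ["sentiment", "campaign_brainstorm", "chatbot_faq"]:
    print(f"{task:<22} -> {route(task)}")
```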

Small Language Models (SLMs) FAQs:

1. What distinguishes Small Language Models (SLMs) from Large Language Models (LLMs)?

SLMs have far fewer parameters—typically millions to a few billion—while LLMs can exceed 100 billion. SLMs are faster, cheaper to deploy, and easier to fine-tune for domain-specific tasks. LLMs, while more flexible, require significantly more compute and budget.

2. Can SLMs reduce hallucinations compared to LLMs?

Size alone does not reduce hallucinations; on open-ended factual questions, smaller models are often more error-prone because they store less world knowledge. The advantage comes from fine-tuning. An SLM trained on a narrow, well-curated dataset stays focused on its task and is less likely to produce off-topic or invented content within that domain, whereas a general-purpose LLM used without grounding or customization has no built-in focus on your specific business context.

3. Which marketing tasks are best handled by SLMs versus LLMs?

SLMs are ideal for high-volume, repeatable marketing tasks like product descriptions, email copy, chatbot replies, sentiment classification, or review summarization. LLMs are better suited for tasks requiring open-ended reasoning, brainstorming, or content ideation across multiple verticals. A hybrid strategy often delivers the best results.

4. What cost and latency savings can I expect from SLM deployment?

SLMs require significantly less compute per query. Because inference cost scales roughly with parameter count, a model that is 10x to 100x smaller needs roughly 10x to 100x less compute. That means lower cloud bills or, if you deploy locally, a one-time hardware and maintenance cost instead of ongoing per-call API fees. Responses also come back faster, which improves real-time user experiences and shortens wait times.
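The 10x to 100x range follows from a common rule of thumb: for a dense transformer, generating one token costs roughly two floating-point operations per parameter. Under that approximation (which ignores attention overhead and does not apply directly to mixture-of-experts models, which activate only part of their parameters per token), the compute ratio reduces to the parameter ratio. The 70B and 400B sizes below are illustrative, not specific products, compared against a 3.8B model such as Phi-3.5-mini.

$$
C_{\text{token}} \approx 2N
\quad\Longrightarrow\quad
\frac{C_{\text{LLM}}}{C_{\text{SLM}}} \approx \frac{N_{\text{LLM}}}{N_{\text{SLM}}},
\qquad
\frac{70\times10^{9}}{3.8\times10^{9}} \approx 18,
\qquad
\frac{400\times10^{9}}{3.8\times10^{9}} \approx 105
$$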

5. How can I fine-tune an SLM to match my brand voice?

Start with a base or instruction-tuned SLM and build a dataset from your brand's existing content: email campaigns, landing page copy, ads, or social posts. Then run supervised fine-tuning, typically on instruction-style pairs that match a brief to the approved copy. Many open-source SLM frameworks support this, and training can often be completed with standard hardware or minimal cloud spend.
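As a starting point, a brand-voice dataset can be a JSONL file of short conversations: a system prompt describing the voice, a brief as the user turn, and your approved copy as the assistant turn. The sketch below assumes this "messages" layout, which is the conversational format accepted by several fine-tuning tools (Hugging Face TRL among them); check your framework's documentation for its exact schema. The brand name, system prompt, examples, and output filename are hypothetical.

```python
import json

# Hypothetical brand voice description; replace with your own style guide summary.
SYSTEM_PROMPT = "You write marketing copy for Acme Outdoors: warm, concise, no jargon."

# Hypothetical training pairs: a brief plus the copy your team actually approved.
examples = [
    {"brief": "Subject line for the spring sale email",
     "approved": "Spring into savings: 20% off everything this week"},
    {"brief": "Meta description for the hiking boots page",
     "approved": "Waterproof hiking boots built for long trails and wet weather."},
]

def to_chat_record(ex: dict) -> dict:
    """Convert one example into a system/user/assistant 'messages' record."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": ex["brief"]},
            {"role": "assistant", "content": ex["approved"]},
        ]
    }

# One JSON object per line (JSONL).
lines = [json.dumps(to_chat_record(ex), ensure_ascii=False) for ex in examples]
with open("brand_voice_sft.jsonl", "w", encoding="utf-8") as f:
    f.write("\n".join(lines) + "\n")
```

A few hundred well-chosen examples that cover your main content types usually say more about the voice than thousands of near-duplicates.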

6. What privacy benefits do SLMs offer for customer data use?

SLMs can be deployed on-premises or on edge devices, meaning sensitive customer data doesn't have to leave your environment. This removes the dependence on third-party APIs for processing, strengthens data governance, and makes it easier to meet requirements under regulations like GDPR, HIPAA, or CCPA. For marketers in regulated industries, this local control is a major advantage.

7. Can SLMs be deployed on local devices?

Yes. Many SLMs are designed specifically for on-device or edge deployment, including smartphones, laptops, or even embedded systems. This makes them ideal for low-latency applications, especially in settings where cloud connectivity is limited or cost-prohibitive.

8. Do SLMs hallucinate less than LLMs?

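Often, within their domain. SLMs that are trained or fine-tuned on task-specific datasets tend to produce more consistent and accurate results for that task, and their narrower focus means fewer off-topic or fabricated responses, which makes them dependable for customer-facing messaging. Outside that domain, they are generally more prone to factual errors than LLMs.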

9. What limitations do SLMs have?

SLMs are less capable in open-domain reasoning, long-form generation, or tasks requiring general world knowledge. They’re also more susceptible to overfitting if the training data is too narrow or unbalanced. However, their performance improves significantly when applied within a clear domain and with solid fine-tuning.

10. How should marketers choose between SLMs and LLMs?

Choose SLMs for efficiency, scale, and privacy when handling structured or domain-specific content. Choose LLMs when you need creative expansion, exploration across topics, or tasks that benefit from deeper general knowledge. Most effective AI stacks today blend both types based on task complexity and business goals.

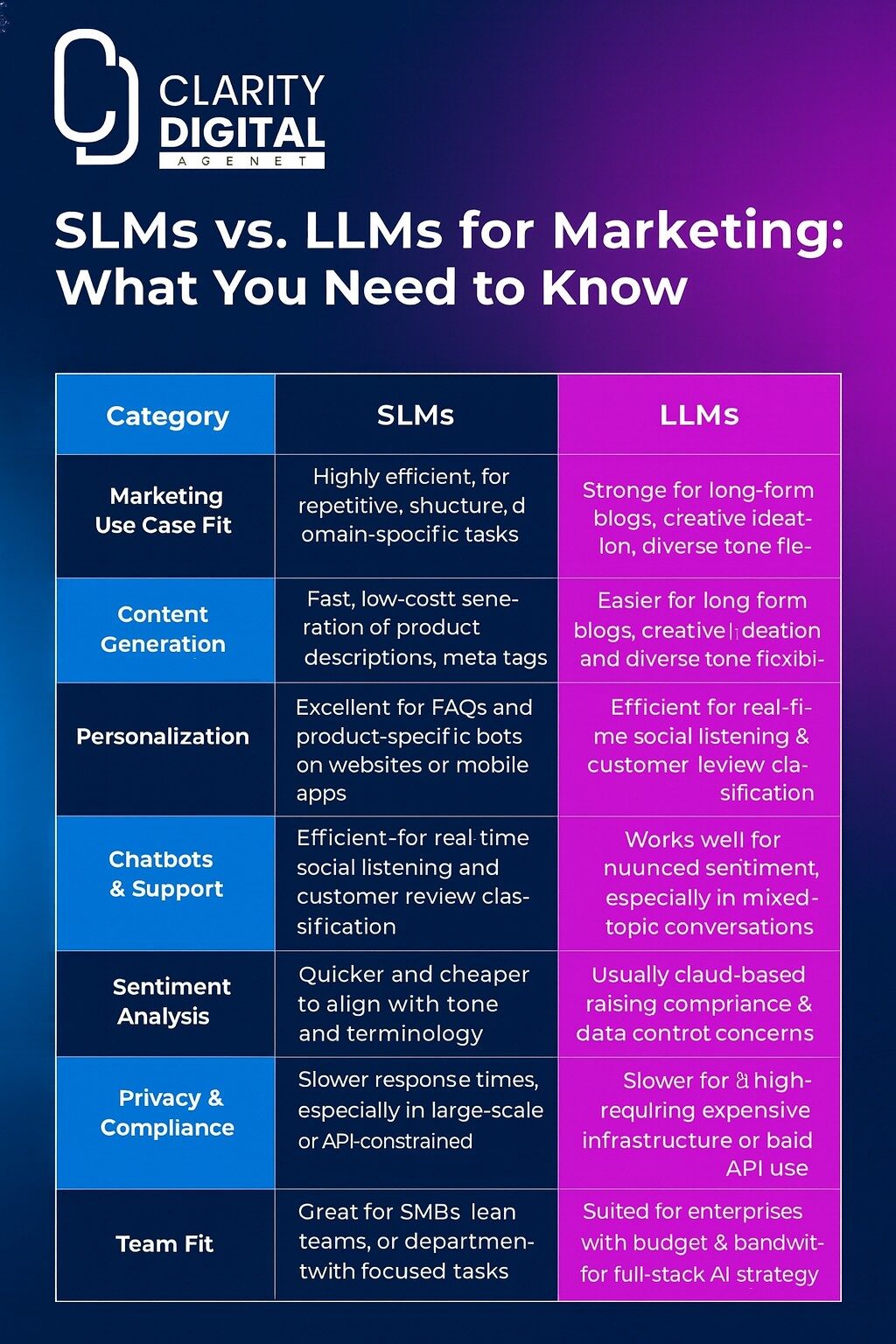

SLMs vs. LLMs for Marketing: Comparison Table

| Category | SLMs (Small Language Models) | LLMs (Large Language Models) |

|---|---|---|

| Marketing Use Case Fit | Highly efficient for repetitive, structured, and domain-specific tasks | Stronger for open-ended, cross-domain, or creative brainstorming tasks |

| Content Generation | Fast, low-cost generation of product descriptions, meta tags, email copy | Better for long-form blogs, creative ideation, and diverse tone flexibility |

| Personalization | Easy to fine-tune per customer segment for emails, CTAs, site experiences | Can personalize at scale but requires more compute and orchestration |

| Chatbots & Support | Excellent for FAQs and product-specific bots on websites or mobile apps | Can support broader, more general-purpose assistants (at higher cost) |

| Sentiment Analysis | Efficient for real-time social listening and customer review classification | Works well for nuanced sentiment, especially in mixed-topic conversations |

| Brand Voice Training | Quicker and cheaper to align with tone and terminology | Requires more data and effort to enforce brand consistency |

| Privacy & Compliance | Runs on-prem or edge, keeping customer data local | Usually cloud-based—raising compliance and data control concerns |

| Speed & Latency | Sub-second inference, ideal for live content or interactive UX | Slower response times, especially in large-scale or API-constrained settings |

| Cost of Deployment | Low—can run on standard devices or internal servers | High—requires expensive infrastructure or paid API use |

| Team Fit | Great for SMBs, lean teams, or departments with focused tasks | Suited for enterprises with budget and bandwidth for full-stack AI strategy |

| Best For | Marketers needing automation, privacy, and targeted scale | Marketers exploring high-volume, open-ended, or experimental AI use cases |

You may also download the table above by saving the image below:

Ready to Assess Your Marketing Team's AI Readiness and See Where SLMs Fit Into Your Stack?

At Clarity Digital, we help brands assess and implement AI tools that actually move the needle. If you’re exploring how Small Language Models can streamline your content, personalization, or customer engagement strategies, let’s talk.

Book a free 30-minute consultation with our team to assess your AI readiness and identify the right entry points for your marketing goals.